Adaptive User Perspective Rendering for Handheld Augmented Reality

Abstract

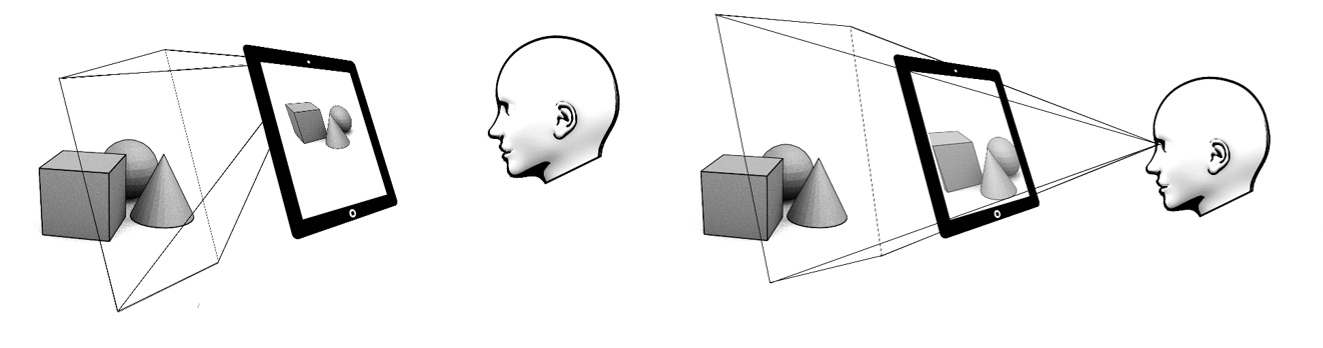

Augmented Reality (AR) applications on handheld devices often suffer from spatial distortions because the AR environment is presented from the perspective of the device’s camera rather than from that of the user.

Recent approaches counteract this distortion by estimating the user’s head position and rendering the scene from the user’s perspective. To this end, they usually apply face-tracking algorithms to the front camera of the mobile device. However, face tracking demands substantial computational resources and therefore commonly degrades application performance, on top of the already high computational load of AR applications.
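As an illustration of what rendering from the user's perspective involves, the sketch below computes an off-axis view frustum in which the device screen acts as a window. It is a generic textbook formulation, not the paper's implementation. The function name `off_axis_frustum`, the coordinate convention (screen centered at the origin in the z = 0 plane, eye at positive z, units in meters), and the example dimensions are all assumptions made for this example.

```python
from typing import NamedTuple, Tuple


class Frustum(NamedTuple):
    """Frustum bounds measured on the near plane (glFrustum-style)."""
    left: float
    right: float
    bottom: float
    top: float
    near: float


def off_axis_frustum(eye: Tuple[float, float, float],
                     screen_w: float,
                     screen_h: float,
                     near: float) -> Frustum:
    """Asymmetric frustum through a screen rectangle, seen from `eye`.

    Assumed convention (hypothetical, for illustration only): the screen
    is centered at the origin in the z = 0 plane and the eye sits at
    ez > 0 in front of it.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (ez > 0)")
    # Similar triangles: project the screen edges onto the near plane.
    scale = near / ez
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
    )


# Eye centered 40 cm in front of a 10 cm x 20 cm screen: symmetric frustum.
centered = off_axis_frustum((0.0, 0.0, 0.4), 0.1, 0.2, 0.1)
# Eye shifted to the right screen edge: the frustum becomes asymmetric.
shifted = off_axis_frustum((0.05, 0.0, 0.4), 0.1, 0.2, 0.1)
```

When the eye is centered, the frustum is symmetric and the result matches an ordinary perspective projection. As the head moves sideways, the bounds skew, which is why the head position has to be estimated at all.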

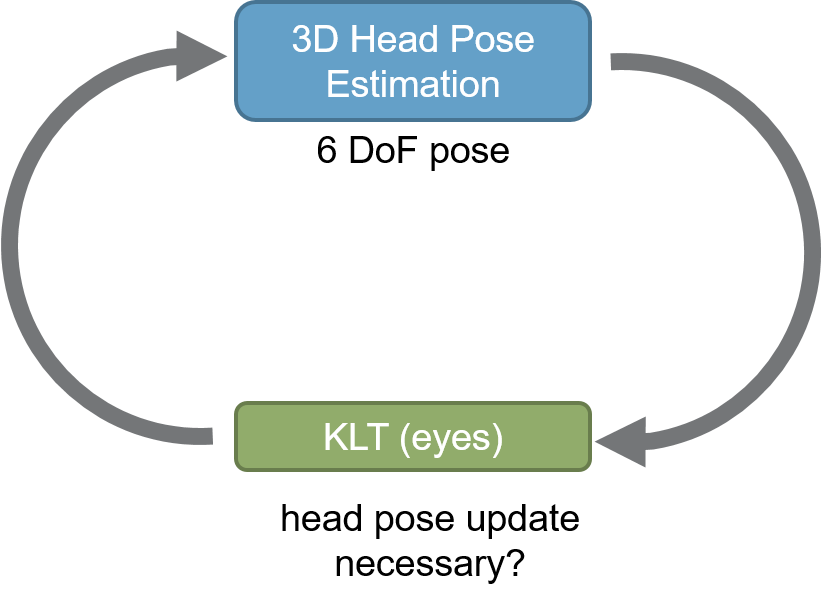

We present a method to reduce the computational demands for user perspective rendering by applying lightweight optical flow tracking and an estimation of the user’s motion before head tracking is started.
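The general idea of running a cheap motion check before starting an expensive head tracker can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' algorithm. A mean absolute frame difference stands in for optical flow. The class `AdaptiveHeadEstimator`, the threshold value, the default head position, and the `track_head` callback are hypothetical names and values chosen for the example.

```python
from typing import Callable, List, Optional, Tuple

Frame = List[List[float]]          # grayscale image as rows of intensities
Head = Tuple[float, float, float]  # head position relative to the screen


def motion_score(prev: Frame, curr: Frame) -> float:
    """Mean absolute intensity change between two frames.

    A cheap stand-in for optical flow magnitude (assumption for this
    sketch; a real system would track image features instead).
    """
    total, count = 0.0, 0
    for row_prev, row_curr in zip(prev, curr):
        for p, c in zip(row_prev, row_curr):
            total += abs(c - p)
            count += 1
    return total / count if count else 0.0


class AdaptiveHeadEstimator:
    """Calls the costly head tracker only when the cheap check sees motion."""

    def __init__(self,
                 track_head: Callable[[Frame], Head],
                 threshold: float = 5.0,
                 default_head: Head = (0.0, 0.0, 0.4)) -> None:
        self.track_head = track_head    # hypothetical expensive face tracker
        self.threshold = threshold      # hypothetical motion threshold
        self.head = default_head        # assumed fixed point of view at start
        self.prev: Optional[Frame] = None
        self.tracker_calls = 0

    def update(self, frame: Frame) -> Head:
        moved = (self.prev is not None
                 and motion_score(self.prev, frame) > self.threshold)
        if moved:
            # Motion detected: pay for one run of the expensive tracker.
            self.head = self.track_head(frame)
            self.tracker_calls += 1
        self.prev = frame
        return self.head


# Two identical frames followed by a changed one.
frames = [[[10.0] * 4] * 4, [[10.0] * 4] * 4, [[60.0] * 4] * 4]
estimator = AdaptiveHeadEstimator(track_head=lambda f: (0.02, 0.0, 0.35))
heads = [estimator.update(f) for f in frames]
```

In this sketch the estimator keeps returning the last known head position while the front-camera image is stable, so the tracker cost is only paid on frames where the user appears to have moved.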

We demonstrate the suitability of our approach for computationally limited mobile devices and compare it to device perspective rendering, to head-tracked user perspective rendering, and to fixed point-of-view user perspective rendering.

Media

Publications

- Peter Mohr, Markus Tatzgern, Jens Grubert, Dieter Schmalstieg & Denis Kalkofen. Adaptive User Perspective Rendering for Handheld Augmented Reality. In Proceedings of IEEE 3DUI 2017, March 18-19 2017 (pp. 176-181).

Honorable Mention for Best Paper